The Growing Digital Divide

Given the pace of technological change over the last 40 years and an ageing population, it is no surprise that the digital divide has widened between capable early adopters and less capable laggards. They are laggards not by choice but by circumstance: age, socioeconomic grouping and the cost of living have all taken their toll. However, another factor lurking below the headlines is the role the technology sector itself has played. Technology companies have been unwilling to innovate, design and build for underserved groups in society such as the unskilled, underfunded or disadvantaged, so these consumers continue to get a raw deal.

Government and AI: A Balancing Act

Technology companies have a chance to put this right with AI. But governments, having missed the chance to protect these groups during the rapid rise of social media, are now scrambling to legislate for AI from the start. Their best intentions, the speed at which legislation is being brought in, and the speed of AI advancement are not compatible. This could backfire, disadvantaging more of society and widening the digital divide further.

Public Awareness and Adoption

A recent study showed that a sizable minority of the public – between 19% of the online population in Japan and the US and 30% in the UK – have not heard of any of the most popular AI tools (including ChatGPT). This is despite nearly two years of hype, policy conversations, and extensive media coverage. Even among those who have heard of them, adoption is still slow. Many of those who say they have used Generative AI have used it just once or twice. It has yet to become part of people’s routine internet use. So why is this? What is the barrier, beyond behaviour, that might be holding society back?

30% of the online population in the UK have not heard of any of the most popular AI tools (including ChatGPT)

The Reuters Institute and the University of Oxford

Patterns of AI Adoption

The same study identified patterns of AI adoption similar to those seen with technology innovations of the past. Use of ChatGPT is slightly more common among men and those with higher levels of formal education, but the biggest differences are by age group, with younger people much more likely to have ever used it and to use it regularly. Averaging across all six countries in the study, only 16% of those aged 55 and over say they have used ChatGPT at least once, compared to 56% of 18–24s. But even among the younger age group, infrequent use is still the norm, with just over half of users saying they use it monthly or less.

So how do we make Gen AI more inclusive?

Making Gen AI More Inclusive

Let's take the elderly as an example. In the heart of the digital age, many elderly individuals already struggle with the technology required for cashless payments, leading to difficulties in accessing goods and services. Older adults have concerns about the security of digital payments, preferring the tangibility and perceived safety of cash.

A quiet revolution is unfolding. Generative AI, the latest frontier in artificial intelligence, is rapidly permeating society, transforming how we work, communicate, and learn. It’s a force of change, a catalyst for innovation, and a beacon of progress. But as this technology becomes more entrenched in our daily lives, it raises a critical question. Will the older generations be left in its wake, or can they too ride the wave of this digital tsunami?

The Digital Literacy Gap

At present, new skills like prompt engineering and AI literacy stand in their way. As these skills become increasingly sought after, there’s a palpable fear that the elderly may find themselves on the fringes of society. The digital divide is not a new concept, but Generative AI threatens to widen this chasm, creating a generational gap that’s not just about age but about the ability to engage with and understand emerging technologies.

The Societal Impact of Exclusion

The impact of this exclusion is multifaceted. Socially, it can lead to isolation and a diminished sense of belonging among the elderly. Economically, it can exacerbate unemployment and underemployment in this demographic, who may struggle to adapt to AI-driven workplaces. The distribution of wealth, already a contentious issue, could see further skewing as AI proficiency becomes a determinant of economic success.

Embracing Inclusivity in AI

To mitigate these effects, society needs to embrace inclusivity in technology. This means accessible AI education for all ages, user-friendly design that considers the accessibility and cognitive needs of the elderly, and policies that encourage lifelong learning and adaptation.

Academic thinking on these questions is illuminating. Researchers argue for ‘Generational AI’, a concept that emphasizes the inclusion of the elderly in the digital narrative. Studies suggest that when designed with empathy and inclusivity, AI can empower rather than exclude, offering tools that compensate for age-related decline and providing platforms for the elderly to contribute their vast knowledge and experience.

But if we don’t get this right, the welfare system, already under strain, faces a daunting challenge. Supporting an ageing population that’s at risk of being marginalized by technology requires not just financial aid but a structural rethinking of social support. It calls for innovative programs that integrate the elderly into the digital economy, perhaps as mentors, consultants, or educators, leveraging their expertise in a world that’s learning to coexist with AI.

The Future of AI and Society

As Generative AI continues to shape our world, we must consider its broader implications. It’s not just about the technology itself but about the society we aspire to be—a society that values all its members, regardless of age, and sees the advancement of AI not as a barrier but as a bridge to a more inclusive future.

Principles for Inclusive AI

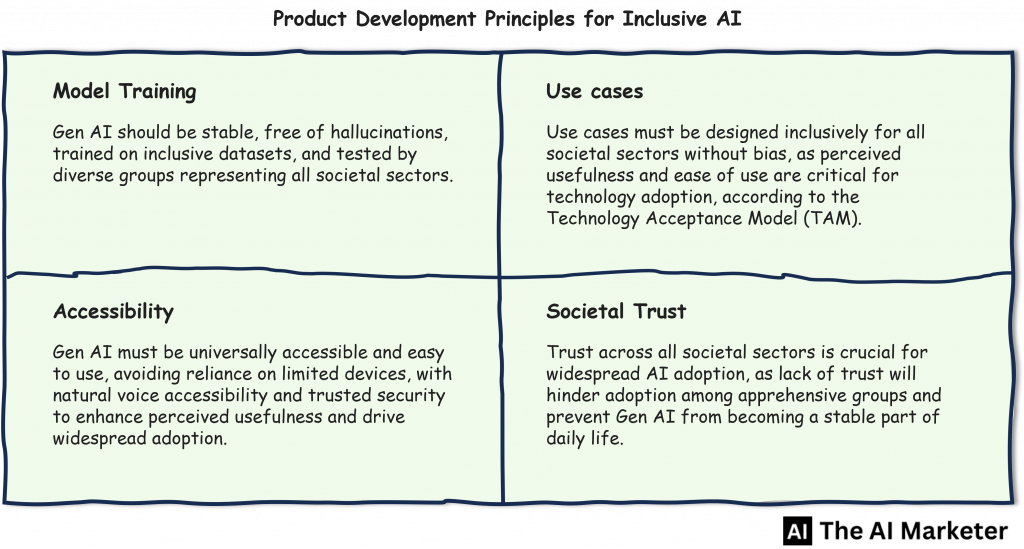

For all these reasons, we now depend on tech companies to get this right. Gen AI, with its natural language interface, has the potential to be one of the most powerful and fastest-adopted technologies ever, largely because that same interface makes it one of the most accessible. Big tech needs to better apply the following four principles in the development and go-to-market of Gen AI, which in some cases requires a new way of thinking.

Model Training

Gen AI needs to be stable and free of hallucinations. It should be trained using the most inclusive datasets possible and tested by inclusive groups representing all sectors of society, combining the collective power of diverse datasets with diverse human oversight.

Use cases

Use cases must reflect inclusiveness beyond the core mass market: they must be designed and built with all sectors of society in mind, not with a bias. Perceived usefulness is one of the main factors in the widely used theoretical framework known as the Technology Acceptance Model (TAM). Originally proposed by Fred Davis in the late 1980s and expanded upon by later researchers, TAM holds that perceived usefulness and perceived ease of use are key determinants of user acceptance and adoption of technology.

Accessibility

AI must be made easy to use, with ubiquitous, unrestricted access that is not limited by form of engagement. Otherwise, it will not achieve cross-society adoption. Any reliance on a limited range of devices will stall adoption. Gen AI with natural voice accessibility has the potential for universal access.

However, with ubiquitous access come perceived barriers to adoption, such as acceptance and trust of its security credentials. Perceived usefulness matters too: it is the user’s subjective perception that using a particular technology will enhance their job performance or productivity. The more useful a user perceives a technology to be, the more likely they are to use it. This is why designing technology for all is pivotal when it comes to adoption. With the potential to have a global influence over how we live our lives from this day onwards, the stakes are high.

Societal Trust

This may be the biggest barrier. All sectors of society must trust AI if it is to be adopted widely and deliver its full potential, and each needs to trust the technology for its own ‘adoption-driving’ use case. Without that, adoption amongst apprehensive sectors of society will not occur and, despite a growth in ‘usefulness’, Gen AI will not become a staple of everyday life for all.

Legislative Challenges and Opportunities

However, a new barrier is looming, threatening to counteract such approaches. It is a legislative barrier that big tech needs to overcome. The European Union has introduced the Digital Markets Act. This Act alone has the potential to restrict access to AI for millions of EU residents. In doing so, it creates a new dimension in the digital divide, that of geography. Apple has said it will delay the launch of Apple Intelligence features in the EU because of regulatory uncertainties.

The EU Digital Markets Act legally requires large digital platforms to share data with others to help local start-ups better compete with Big Tech companies. Much of Apple’s AI processing occurs on the device to protect consumer privacy, an approach that is incompatible with this new European legislation. This is despite the EU having some of the toughest consumer privacy laws in the world.

It seems that if you are a big US tech company, you just can’t satisfy EU legislators: it is a ‘damned if you do, damned if you don’t’ situation. Other companies are following Apple. OpenAI has withheld recent feature enhancements. Meta has also said it would not launch its latest AI models in Europe. European legislators are too siloed in their legislative approach. Making it easier for start-ups to access data in this way will slow the adoption of AI in Europe, which could cost businesses and economies billions.

The Path Forward

So, will AI become another lost opportunity to narrow the digital divide? Or can big tech companies and governments work together to create the best possible future for citizens around the globe? A future where privacy, competitiveness and consumer protection work together, not separately. Those of you working in tech companies could do worse than try to steer your companies towards the principles set out above. Those of you setting legislation need greater balance and fewer silos, and ultimately need to ask yourselves the question, ‘Is this in the best all-round interest of the consumer?’

What AI was used for this post?

- ChatGPT was used to fact-check and research this article.