First-party data is more prevalent and easily accessed than ever before. Organisations hold vast data lakes of this data. Whilst, at the same time, Google is encouraging websites to collect more first-party data so that they can continue to target users with relevant ads without relying on third-party cookies. The growing use of AI has now thrust consumer data privacy back into the limelight. As marketers rapidly adopt the use of AI, the importance of ‘data privacy in AI’ becomes increasingly paramount.

AI is remarkably powerful. However, it isn’t just a case of what goes in could come out. The ability of AI to analyse and consolidate data can make customer data more accessible. This can be used for both beneficial and harmful purposes. From pattern recognition to user identification, there are many privacy concerns that AI could infer sensitive information, such as political allegiance, sexuality, preferences, and other habits. This poses the risk of unauthorised data use.

As AI adoption grows, the industry is placing a heightened focus on ethical considerations. Marketers are actively engaging in discussions around responsible AI use, ensuring transparency, fairness, and accountability in their AI-powered initiatives. It is reported that three-quarters of companies are delaying AI due to ethics concerns. There will be impatient marketers who could choose to ask for forgiveness, not for permission. This will carry unknown risks for those marketers.

When asked about ethical issues around the data used in AI marketing operations, 77.5% of firms have been forced to delay implementation of AI and automation due to concerns about bias and fairness, with 32.7% saying delays have been significant.

marketingtechnews.net

Privacy laws such as GDPR (General Data Protection Regulation), CCPA (California Consumer Privacy Act) and the ePrivacy Directive were all drafted and legislated before AI changed our lives, a change that has occurred incredibly fast. The speed of innovation, adoption and lack of specific legislation surrounding the use of AI could lead to erosion of customer privacy, increased data breaches and system biases on a scale never seen before, especially as much of the data usage is covert. So what should marketers and businesses be aware of when using customer data with AI tools and Large Language Models (LLMs)? What is best practice and what does the future hold?

How does existing privacy legislation legislate

In Europe, GDPR governs the processing of personal data, emphasising individuals’ rights and imposing obligations on organisations to ensure the lawful, transparent, and secure handling of such data. GDPR outlines specific principles for the lawful processing of personal data. Across the pond, the leading privacy legislation the CCPA shares many similarities with GDPR in that respect, whilst also focusing more on providing consumers with control over their data, emphasising transparency about data collection and the purpose for which it is used.

The ePrivacy Directive, which existed before either the GDPR or CCPA and which is part of the EU’s legal framework concerning data privacy and electronic communications, primarily addresses the confidentiality of electronic communications and the rules related to the tracking and monitoring of internet users. Therefore, the use of AI or LLMs with customer data, even under the ePrivacy Directive, would still need to comply with the broader principles of EU data protection law, notably the General Data Protection Regulation (GDPR).

What provision does existing privacy legislation make for advances in AI

In the context of AI and LLMs, special attention must be paid to the principles of transparency, data minimisation, and accountability. AI systems often process large amounts of data, and their decision-making processes can be complex and not easily understandable. This poses challenges for transparency and accountability. Additionally, the GDPR’s provisions on automated decision-making and profiling may be relevant if AI or LLMs are used in these contexts. Forrester predicts Gen AI will lead to breaches and fines in 2024.

Organisations using AI or LLMs to process customer data must ensure that they have a lawful basis for processing the data, such as consent or legitimate interest, and must comply with all other GDPR obligations. They must also consider the rights of data subjects, including the right to information, the right to access, and the right to object to automated decision-making.

GDPR Requirements for Processing Personal Data

The GDPR sets out key principles and requirements for processing personal data, which include:

- Lawfulness, fairness, and transparency: Data processing should be lawful, fair, and transparent to the data subject. Therefore, AI systems must have a lawful basis for processing personal data.

- Purpose limitation: Data should be collected for specified, explicit, and legitimate purposes and not further processed in a manner that is incompatible with those purposes. This could include obtaining the explicit consent of individuals, fulfilling a contractual obligation, complying with a legal obligation, protecting vital interests, performing a task carried out in the public interest or the exercise of official authority, or pursuing legitimate interests (provided they do not override the interests and fundamental rights of the data subjects).

- Data minimisation: Only data that is necessary for the purposes for which it is processed should be collected. AI systems should not collect or process more data than is required for their specified tasks.

- Transparency and Explainability: GDPR emphasises transparency in data processing. Individuals have the right to be informed about the processing of their data, including when AI is involved. Moreover, if automated decision-making, including profiling, is used, individuals have the right to meaningful information about the logic, significance, and consequences of such processing.

- Automated Decision-Making and Profiling: GDPR provides individuals with the right not to be subject to a decision based solely on automated processing, including profiling, if it produces legally binding or similarly significant effects. Exceptions exist, such as when the decision is necessary for entering into or performing a contract, authorised by law, or based on the individual’s explicit consent.

- Security Measures: GDPR requires organisations to implement appropriate technical and organisational measures to ensure the security of personal data. This is particularly relevant when AI systems are involved, as they may pose specific risks to data security. Data should be accurate, kept up to date, kept in a form which permits identification of data subjects for no longer than necessary, and processed in a manner that ensures appropriate security

- Accountability: The data controller is responsible for, and must be able to demonstrate, compliance with all these principles.

Data privacy in AI: Uncovering the existing gaps in legislation for consumer protection

The biggest black hole when it comes to data privacy in AI and customer data must be the use of said data in public LLMs. Right now, these public LLMs are powering a shedload of AI tools and capabilities as marketers, giddy with the power AI marketing tools can deliver, test, trial and apply these tools without caution. AI in the form of Gen AI has only been in commercial use since late 2022. Education and understanding of how these platforms work are low and we have rapidly seen the use of Gen AI cross the chasm from earlier adopters to mainstream practitioners. The ease of access to the likes of Chat GPT and Bard, the eagerness to convert these powerful tools into competitive advantage and the encouragement of leadership could easily be a recipe for blowing caution to the wind.

The danger exists covertly, not immediately traceable back to employee actions. After all, what audit trail exists should a marketer, looking for the answer to a new and clever way of segmenting the customer base, enter customer data from a CRM? Chat GPT can do this in seconds surely!

In this scenario, how can an organisation even show a consumer what customer data might have been consumed into an AI tool or LLM? Where is the audit trail to demonstrate how that has gone on to train the models that exist beyond the corporate walls of your organisation? Before you know it, you have customer data being ingested into LLMs, being processed to further enhance the model. And how could this even be subsequently reversed?

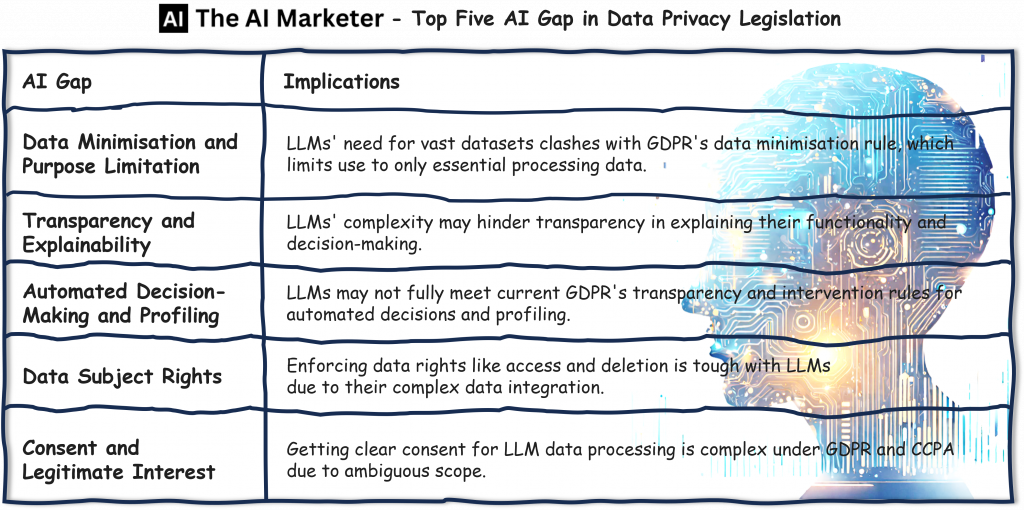

The gap between privacy legislation like GDPR in the EU and CCPA in the United States, and the use of customer data being processed by LLMs like ChatGPT, primarily revolves around the specific challenges posed by the nature of LLMs.

The AI Gap in Data Privacy Legislation

These challenges highlight the AI gap where the key principles of privacy legislation are no longer sufficient:

- Data Minimisation and Purpose Limitation: LLMs require large datasets to train and operate effectively. This need conflicts with the data minimisation principle of the GDPR, which dictates that only data necessary for the specific purposes of processing should be used. Similarly, CCPA emphasises consumers’ rights to know about and control the personal information a business collects about them and how it is used. However, how can we safeguard from data breaches resulting, directly or indirectly, if huge amounts of Personally Identifiable Information (PII) enter and train public Large Language Models? Would legislation alone be enough? After all, the legislation does not stop data breaches occurring in the first instance.

- Transparency and Explainability: Both GDPR and CCPA emphasise transparency. However, the complexity of LLMs can make it difficult to explain how they work and how decisions are made, potentially affecting the ability to meet these transparency requirements.

- Automated Decision-Making and Profiling: GDPR has specific provisions on automated decision-making and profiling that require transparency, the right to obtain human intervention, and the right to challenge decisions. LLMs, especially when used for decision-making or profiling, might struggle to meet these requirements.

- Data Subject Rights: Under GDPR and CCPA, individuals have rights regarding their data, such as the right to access, the right to be forgotten, and the right to rectification. Implementing these rights can be challenging when dealing with LLMs, especially when data is integrated into complex models.

- Consent and Legitimate Interest: Obtaining explicit consent for data processing under GDPR (and similar provisions under CCPA) can be complicated when using LLMs, as the scope of the data processing might not be fully clear to the data subjects.

- Bias and Discrimination: There are concerns about bias in AI systems. Both GDPR and CCPA, while not addressing AI bias directly, have provisions that could apply to discriminatory outcomes resulting from biased AI systems.

- International Data Transfers: GDPR has strict rules on transferring data outside the EU. LLMs often operate on a global scale, and ensuring compliance with these data transfer rules can be challenging.

- Security and Data Breach Risks: The use of large datasets in LLMs can increase the risk of data breaches. Both GDPR and CCPA have stringent requirements for data security and breach notification.

Therefore, there is an “AI Gap” that lies in the ability of current privacy legislation to fully address the unique challenges posed by the advanced and often opaque nature of LLMs.

What are the risks of using AI without complying with data privacy legislation?

There’s a growing societal expectation for ethical data use. Failure to meet these expectations can lead to public backlash. It is clear from the AI Gap that organisations and marketers who look to obtain first-mover advantage without proper thought and care for customer privacy carry real and present risks for organisations under existing privacy laws.

Consider the surge of Chat GPT plugins and user-generated GPTs, where we have limited knowledge about their data use from prompts, developer identity, and the eventual destination of this data.

We can categorise these risks as follows:

Financial Risk:

- Data Breach and Non-Compliance Penalties: Failure to comply with data protection laws like GDPR, CCPA, or others can lead to substantial fines. For instance, under GDPR, fines can reach up to 4% of annual global turnover or €20 million (whichever is greater).

- Litigation Risks: Non-compliance can also lead to legal action from individuals or groups affected by data privacy violations.

- Cost of Data Breaches: Beyond fines, data breaches can incur costs like forensic investigations, customer notification, and credit monitoring services for affected individuals.

- Increased Insurance Premiums: Companies with poor data practices may face higher premiums for cybersecurity insurance.

Reputational Risks

- Loss of Consumer Trust: Mishandling customer data can lead to a loss of trust. This can be difficult and costly to rebuild. Trust is a key component of customer loyalty and retention. What’s more, if your processes for retaining customer data are already in poor shape and bad practice leads to your organisation holding out-of-date, misleading or incorrect data, should this data find its way into the LLM, you risk causing individuals reputational risk and incorrect data is difficult to purge.

- Brand Damage: Incidents of data misuse or breaches can cause lasting damage to a brand’s reputation.

- Negative Publicity: Data privacy issues often attract media attention, leading to negative publicity.

Technical and Operational Risks

- Corporate Security: Not only is your customers’ privacy and security at risk if PII enters the LLM powering the AI tools you use, but so is the security of your organisation which could be left vulnerable. Bad actors are as much users of AI as you are, probably more so. They will probe platforms like ChatGPT to extract data potentially harmful to corporations or individuals. They possess the expertise to prompt AI into divulging sensitive information such as PII, system access, or intellectual property for exploitation.

- Resource Drain: Addressing privacy issues, especially after a breach or non-compliance, can consume significant organisational resources, including time, personnel, and technology.

- Operational Disruptions: Responding to data breaches or legal actions can disrupt normal business operations.

Companies know that poor data handling may result in loss of business opportunities, as partners and customers seek more trustworthy alternatives. Publicly traded companies might see a decline in stock prices following significant privacy incidents and customers may choose to take their business elsewhere if they feel their data is not being treated with the care it deserves.

What can be done to minimise the risk of AI invading privacy?

To prevent Personally Identifiable Information from inadvertently entering LLMs like ChatGPT and being used in responses, several layers of safeguards and best practices should be implemented. These measures aim to protect individual privacy and comply with data protection laws. There is a responsibility for implementing safeguards to protect PII in the context of Large Language Models (LLMs). This generally falls on both the operators of the LLMs and the users or organisations that implement them.

LLM Operators

LLM operators have a primary responsibility to ensure that their systems are designed and operated in a way that respects privacy and complies with relevant data protection laws. Their responsibilities include:

- Data Handling and Processing: Implementing robust data collection, processing, and storage practices to ensure that PII is handled securely and lawfully.

- Model Training: Ensuring that the data used for training the LLMs is appropriately anonymised or pseudonymised and that the models do not retain or reproduce PII from the training data in their outputs.

- Security Measures: Providing strong security measures to protect against unauthorised access to, or misuse of the LLMs and the data they process.

- Transparency and Accountability: Being transparent about how the LLMs work, the data they use, and the measures in place to protect privacy.

- Providing Mechanisms for Control and Reporting: Offering users ways to report and remove PII, and control over how their data is used.

- Engage with Local Regulators: Conducting a proactive assessment which identifies privacy risks and engaging with local regulators to address these risks in advance of operation. OpenAI apparently rolled ahead and launched ChatGPT in Europe without engaging with local regulators which could have ensured it avoided falling foul of the bloc’s privacy rulebook.

Marketers and Organisations

Marketers and organisations that employ LLMs for various applications also bear responsibility. Especially when they input data into these models or use the outputs for decision-making. It is generally acknowledged and forecast that 90% of data breaches in 2024 will feature some kind of human element. They should ensure the following safeguards:

- Know your LLMs Privacy Policy: Know your LLMs Enterprise Privacy Policy as part of any vendor approval process. For example, Google asserts that Bard training data does not include private information, their privacy policy allows for some data collected during interactions to be used for improving Google products and services. Understanding these limitations is crucial.

- Data Mapping and Inventory: Maintain a detailed inventory of the data being processed through any AI tools. This includes understanding what data is collected, where it comes from, and how it is used. This mapping is crucial for responding to data subject requests, ensuring compliance, and should already be best practice in any organisation processing customer data.

- Responsible Use: Ensuring that employees use LLMs in a manner that respects privacy and does not involve the input of PII without proper consent or legal basis. Organisations should be clear and inform staff what they can and can’t use AI tools and LLMs for. Write this into the staff handbook.

- Compliance with Privacy Policies: Adhering to company privacy policies and relevant data protection laws in the way LLMs are used. Policies should be created that incorporate processes to protect employees.

- Role-based controls: Restrict access to authorised employees who need it for specific tasks and limit permissions to specific functionalities if platform controls allow. Features like this may only be available via Enterprise accounts. Although Enterprise licences can cost more than personal user licences, this extra protection is worth it and necessary.

- Data segregation: If possible, create separate instances or projects for different departments or teams, preventing cross-contamination of data. However, this can sometimes be detrimental to initiatives such as creating a 360 view of customers. The secret of how these two requirements can live harmoniously is in the practices and platforms used. Choose wisely, not cheaply.

- Opt out: Some platforms allow organisations to opt out of any data input being used to train models further. For example, employee use of Chat GPT in an organisation might be dependent on the user following the process to opt out of data sharing. This can be done by visiting OpenAI’s Privacy Portal. Alternatively, upgrading to the new ChatGPT Team or Enterprise licences will exclude your data from model training by default.

- Awareness and Training: Ensuring that users are aware of the privacy implications and trained in responsible use of LLMs.

- Monitoring Outputs: Regularly review the outputs from LLMs for any potential privacy issues, especially if used in sensitive contexts.

Shared Responsibility for Data Privacy in AI

In many cases, the responsibility is shared. LLM operators must provide tools and frameworks that facilitate privacy protection. Users and organisations must use these tools responsibly and in compliance with legal requirements. The concept of “privacy by design and by default”, a key principle in data protection laws like GDPR, suggests that privacy safeguards should be an integral part of the technology’s design and operation. This is a shared responsibility of both developers and users.

What does the future of data privacy in AI hold?

So, the upshot is that employee education, organisational safeguards and further legislation will form the solution to minimising customer privacy risks and destabilising customer security integrity. There are also technological developments that could help. Could it be possible that AI itself could help govern and safeguard against the risks specified?

Causal AI is a strand of AI. It focuses on understanding the cause-and-effect relationships rather than just correlations. It can be a powerful tool in controlling and managing the use of customer data in Large Language Models (LLMs). Here’s how causal AI can be applied in this context:

- Understanding Data Dependencies: Causal AI can help in understanding how different pieces of data are interconnected and how changes in one aspect of the data might affect the rest. This understanding is crucial for data minimisation, ensuring that only necessary data is used in LLMs.

- Improving Data Privacy in AI: By understanding the causal relationships in data, it’s possible to identify which data elements are critical for the LLM’s performance and which can be omitted or anonymised without significantly impacting the model. This approach aids in respecting privacy while maintaining functionality.

- Enhancing Transparency and Explainability: Causal AI can contribute to making LLMs more transparent and explainable. By understanding the causal relationships in the model’s decision-making process, it becomes easier to explain how and why certain decisions are made. This is essential under GDPR’s right to explanation. It enables such decisions to be audited making it possible to trace back and review how a particular decision was reached. This is crucial for accountability.

- Data Processing and Consent Management: Causal AI can help in mapping out the effects of processing specific types of data, aiding in more informed consent processes. Users can be informed about the specific impact of their data being processed, leading to more meaningful consent.

- Compliance with Data Subject Requests: Causal AI can assist in responding to data subject requests (like access, erasure, or rectification). By understanding how data flows through the LLM and its causal importance, it becomes easier to address these requests effectively.

- Bias Detection and Mitigation: Causal AI can be used to detect and understand biases in LLMs. By analysing the causal pathways, it can reveal how biases are introduced and propagated in the model. This allows for targeted interventions to mitigate these biases.

- Optimising Data Collection Strategies: By understanding the causal impacts of different data points, organisations can optimise their data collection strategies, focusing on collecting data that has the most significant and relevant impact on the LLM’s performance.

- Risk Assessment and Management: Causal AI can be instrumental in conducting Data Protection Impact Assessments (DPIAs). It provides a deeper understanding of how data processing activities affect privacy and compliance risks.

- Dynamic Data Management: Causal AI can enable dynamic data management policies where data usage and processing are continuously adjusted based on causal insights, ensuring optimal balance between utility and privacy.

Google services are incorporating and becoming increasingly reliant on AI. Forthcoming examples include the introduction of Google’s Generative Search Experience and the retirement of third-party cookies. This exacerbates and heightens the risk of customer data being exposed to the public domain should it find its way into these AI tools. Not only in the collection and processing of customer data but also in the output of results which might surface PII without the intention or consent.

There are many initiatives to replace third-party cookies, including:

- Google Privacy Sandbox: Announced in 2019, Google responded to growing concerns about user privacy online and the challenges posed by third-party cookies for both users and businesses. Privacy Sandbox is a set of proposals for new web standards. It aims to give users more control over their privacy online. One of the key proposals in Privacy Sandbox is the Federated Learning of Cohorts (FLoC) API. This would allow websites to group users into cohorts based on their browsing history without revealing individual user data to third parties. In September 2023, several Privacy Sandbox APIs, including the FLoC’s successor Topics, reached general availability in Chrome, marking a significant step towards implementing the initiative.

- Unified ID 2.0: While officially launched in 2023, Unified ID 2.0 (UID2) has roots in several earlier initiatives. Unified ID 2.0 is a proposed industry-wide standard for identifying users without using third-party cookies. It is being developed by the Digital Advertising Alliance (DAA). It is an open-source initiative, fostering broader industry-wide adoption and collaboration. Focused on privacy it emphasises user consent and control over data sharing and uses hashed email addresses or phone numbers, never revealing actual user data. Supporting multiple identity providers it allows publishers and advertisers to choose their preferred provider.

- Novatiq is a solution looking to enable telecom companies to replace 3rd party cookies without identifying customers. They do this by leveraging telco data for privacy-focused, targeted advertising, empowering advertisers while respecting user control. Unlike third-party cookies, the Novatiq solution doesn’t directly identify individual customers across platforms. Instead, it focuses on building audience segments based on aggregated and anonymised data like app downloads, location insights, and demographic trends to understand subscriber behaviour without identifying individuals.

It is still too early to say which of these solutions will ultimately replace third-party cookies. However, the web advertising industry is moving away from third-party cookies and towards more privacy-preserving solutions.

Ethics of AI: In the hands of the marketer

It is fascinating how AI has turbocharged the landscape of marketing so quickly. Allowing us to connect with consumers on a more deeply personal level. But the thought-provoking question is: How do we harness this powerful tool ethically and responsibly? It’s a balancing act, really – marrying the benefits of marketing innovation with the solemn commitment to consumer privacy. This isn’t just about moral obligations; it’s a strategic move that could redefine the future of marketing.

As we navigate this new world, the key for marketers is to champion the cause of data privacy in AI and stick firmly to ethical guidelines in AI usage, creating them where maybe existing privacy legislation falls short.

Imagine creating a marketing ecosystem that’s not only effective but also built on the foundation of trust and respect for consumer privacy. By doing so, we’re not just earning customer loyalty; we’re also aligning with the values of today’s aware and socially conscious consumers. In this new era, ethics in marketing isn’t a roadblock; it’s weaponised, a competitive edge that sets us apart in the tech-savvy marketplace. Let’s embrace this challenge and lead the way in ethical AI-driven marketing!

Marketing FAQs: Data Privacy in AI

Q: Has anybody been fined for data breaches that involve the use of AI?

A: One of the first cases in Europe is that of the case between US-company Clearview AI, and the UK’s Information Commissioner’s Office (ICO). Clearview AI is a facial recognition platform, that uses AI to scrap facial images of people from their public profiles.

The ICO issued an enforcement notice in May 2022, requiring Clearview AI to delete the personal data of UK individuals collected through the use of its facial recognition technology and held in its database. This was accompanied by a £7.5 million fine for several alleged violations under the EU and UK General Data Protection Regulations (“GDPR”). This fine has subsequently been blocked by the UK Courts and remains in appeal. The Information Commissioner stated ‘whilst my office supports businesses that innovate with AI solutions, we will always take the appropriate action to protect UK people when we believe their privacy rights are not being respected’.

Q: What’s the debate about data privacy in AI?

A: This is a topic of global consequence. World leaders are asking whether to put guardrails in place for AI itself. Or look to regulate its effects once the tech is developed. It’s about two approaches: proactive guardrails (stricter rules upfront) vs. reactive regulation (fixing problems after they happen). Both have pros and cons for marketers.

Q: What could proactive guardrails mean for marketers?

A: More rules on data privacy in AI, fairness in algorithms, and transparency in the use of AI. This could mean adapting data practices, explaining how AI works, and focusing on ethical marketing.

Q: What if regulations come later instead?

A: Unclear rules could create uncertainty and legal risks. Bad AI use could hurt your brand’s reputation. You might have to scramble to adapt to new regulations later, hurting campaign performance.

Q: So, what should marketers do?

A: Get familiar with AI and its ethical considerations. Invest in technology for responsible AI and data practices. Build trust with customers by being transparent about AI use. Get involved in shaping AI regulations to make them practical.

Q: What is the European Union’s AI Act?

A: The European Union’s AI Act, which was provisionally agreed upon by the European institutions, marks a significant milestone as the world’s first comprehensive law on artificial intelligence. Expected to become EU law in early 2024, this groundbreaking legislation sets standards for AI regulation globally. The AI Act covers a wide range of AI systems, emphasizing transparency and accountability. The Act prohibits certain AI practices, such as emotion-recognition software, and mandates an ethical framework for AI development. Consumers gain rights, including being informed about high-risk AI decisions and the ability to complain to authorities. Overall, the EU AI Act aims to balance innovation with safeguards for privacy, fairness, and societal well-being.

Q: Will the European Union’s AI Act impact marketers?

A: The European Union’s AI Act will indeed have implications for marketers. Here are the key points relevant to marketing:

- Transparency and Accountability: Marketers using AI systems, especially those considered high-risk (such as personalized advertising algorithms), will need to ensure transparency. Consumers must be informed when AI decisions impact them. Marketers should be prepared to explain how their AI models work and provide clear information about data usage.

- Ethical Considerations: The Act emphasizes ethical AI development. Marketers must avoid discriminatory practices, bias, and unfair targeting. Ensuring that AI-driven marketing campaigns align with fundamental rights and societal values will be crucial.

- Impact on Personalization: While the Act aims to protect consumer privacy, it may impact personalized marketing efforts. Marketers will need to strike a balance between delivering relevant content and respecting user privacy rights.

- Compliance and Audits: Marketers operating within the EU will need to comply with the Act’s requirements. Regular audits and assessments of AI systems will be necessary to ensure adherence to guidelines.

What AI was used for this post?

- Chat GPT 4.0 was used to write the body of this article.

- Research into Third-Party Cookie replacements was conducted via Google Bard, including this prompt and response on Google Privacy Sandbox

- Research into the European Union’s AI Act was conducted using Microsoft Copilot

- Page image created by ‘AI Marketer Staff Writer GPT’